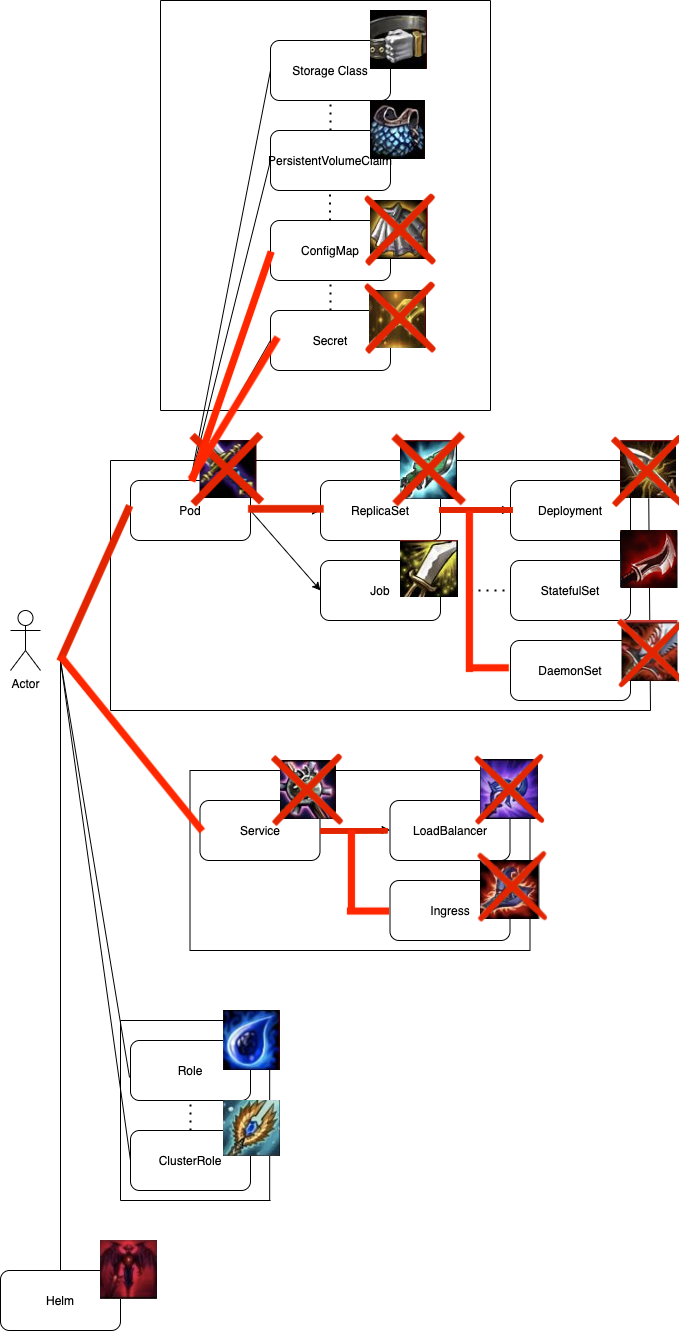

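Remember the Deployment we introduced earlier? A Deployment manages ReplicaSets one-to-many, a ReplicaSet in turn manages Pods one-to-many, and horizontal scaling with replicas absorbs heavy workloads. Some scenarios, however, are beyond what a Deployment can handle, and DaemonSet and StatefulSet were created to address them.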

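A DaemonSet ensures that one copy of a specified Pod runs on every node (or on selected nodes). Whenever a new Node joins the cluster, the DaemonSet adds that Pod to it, and whenever a Node is removed from the cluster, the Pod on that Node is removed as well. To run only on specific nodes, combine it with what we covered in Day-23 Affinity and Anti-Affinity and Day-24 Taints and Tolerations.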
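As a rough sketch of what restricting a DaemonSet to specific nodes can look like, the fragment below combines a node affinity rule with a toleration. The `app=ironman` label is the one used later in this article; the `dedicated=ironman` taint is purely hypothetical and stands in for whatever taint your nodes actually carry.

```yaml
# Fragment of a DaemonSet's .spec.template.spec (not a complete manifest).
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            # Only nodes labeled app=ironman are eligible.
            - key: app
              operator: In
              values:
                - ironman
tolerations:
  # Hypothetical taint: lets the pod land on nodes tainted dedicated=ironman:NoSchedule.
  - key: dedicated
    operator: Equal
    value: ironman
    effect: NoSchedule
```

Affinity decides which nodes are eligible, while the toleration only permits the pod onto tainted nodes; neither one alone does both jobs.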

Finally, deleting a DaemonSet also deletes all the Pods it created.

As usual, let's start with a DaemonSet YAML converted from our Deployment.

daemonset.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: ironman
      labels:
        name: ironman
        app: ironman
    spec:
      minReadySeconds: 5
      selector:
        matchLabels:
          app: ironman
      template:
        metadata:
          labels:
            app: ironman
        spec:
          nodeSelector:
            app: ironman
          containers:
            - name: ironman
              image: ghjjhg567/ironman:latest
              imagePullPolicy: Always
              ports:
                - containerPort: 8100
              envFrom:
                - secretRef:
                    name: ironman-config
              command: ["./docker-entrypoint.sh"]
              resources:
                limits:
                  memory: 200Mi
                requests:
                  cpu: 100m
                  memory: 200Mi
            - name: redis
              image: redis:4.0
              imagePullPolicy: Always
              ports:
                - containerPort: 6379
            - name: nginx
              image: nginx
              imagePullPolicy: Always
              ports:
                - containerPort: 80
              volumeMounts:
                - mountPath: /etc/nginx/nginx.conf
                  name: nginx-conf-volume
                  subPath: nginx.conf
                  readOnly: true
                - mountPath: /etc/nginx/conf.d/default.conf
                  subPath: default.conf
                  name: nginx-route-volume
                  readOnly: true
              readinessProbe:
                httpGet:
                  path: /v1/hc
                  port: 80
                initialDelaySeconds: 5
                periodSeconds: 10
          volumes:
            - name: nginx-conf-volume
              configMap:
                name: nginx-config
            - name: nginx-route-volume
              configMap:
                name: nginx-route-volume

Comparing the Deployment with the DaemonSet, a few things stand out:

The selector is an object made up of two key-value pairs, `matchLabels` and `matchExpressions`.
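To make the two selector fields concrete, here is a minimal sketch that uses both at once. The `tier` label is a hypothetical addition for illustration and is not part of the original manifest.

```yaml
# Fragment of a DaemonSet's .spec (not a complete manifest).
selector:
  matchLabels:
    app: ironman
  matchExpressions:
    # Hypothetical extra requirement; `tier` does not appear in the original YAML.
    - key: tier
      operator: In
      values:
        - backend
template:
  metadata:
    labels:
      # The pod template labels must satisfy the selector above.
      app: ironman
      tier: backend
```

When both fields are present their requirements are ANDed, so a pod must satisfy every one of them to be selected.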

As usual, first delete all pod resources in the default namespace so that we have a clean test environment.

    $ kubectl get pod
    No resources found in default namespace.

Next, decide which nodes to deploy to. Here we want the pod to run on only two Nodes, so we add the label app=ironman to just those two nodes and pair it with nodeSelector in the YAML.

    $ kubectl get node
    NAME                                                STATUS   ROLES    AGE   VERSION
    gke-my-first-cluster-1-default-pool-dddd2fae-j0k1   Ready    <none>   12d   v1.18.6-gke.3504
    gke-my-first-cluster-1-default-pool-dddd2fae-rfl8   Ready    <none>   12d   v1.18.6-gke.3504
    gke-my-first-cluster-1-default-pool-dddd2fae-tz38   Ready    <none>   12d   v1.18.6-gke.3504

    $ kubectl label nodes gke-my-first-cluster-1-default-pool-dddd2fae-j0k1 app=ironman
    node/gke-my-first-cluster-1-default-pool-dddd2fae-j0k1 labeled

    $ kubectl label nodes gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 app=ironman
    node/gke-my-first-cluster-1-default-pool-dddd2fae-rfl8 labeled

Deploy the DaemonSet with kubectl as before, and we can see that it does place pods only on the two labeled nodes.

    $ kubectl apply -f daemonset.yaml
    daemonset.apps/ironman created

    $ kubectl get daemonset --watch
    NAME      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
    ironman   2         2         0       2            0           app=ironman     10s
    ironman   2         2         1       2            0           app=ironman     22s
    ironman   2         2         2       2            0           app=ironman     23s
    ironman   2         2         2       2            2           app=ironman     28s

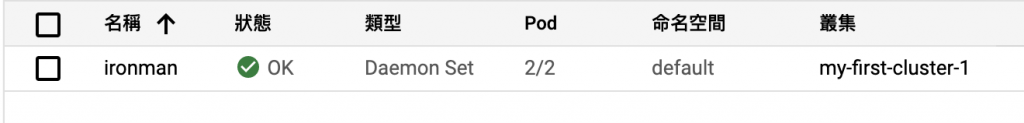

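After deployment, the DaemonSet status is visible in GKE, and we can confirm that the pod is running on each labeled node.

DaemonSet pods were originally not selected and managed by the Kubernetes scheduler; they were created and scheduled by the DaemonSet controller, which led to the following problems:

For this reason, since v1.12 all DaemonSet pods are scheduled by the default scheduler.

This is done by scheduling through nodeAffinity rather than `.spec.nodeName`: the DaemonSet controller adds a nodeAffinity term to each pod, and the default scheduler (the Kubernetes scheduler) then binds the pod to the target Node. If the DaemonSet pod already has a node affinity, it is replaced.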

Tips: if you have forgotten how nodeAffinity works, see Day-23 Affinity and Anti-Affinity.

    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchFields:
          - key: metadata.name
            operator: In
            values:
            - target-host-name

Let's look at one of the DaemonSet pods we just created. We can see that the pod was indeed scheduled by default-scheduler, while the DaemonSet controller is dedicated to managing the DaemonSet itself.

    $ kubectl describe pod ironman-jtntw
    ...
    ..
    Events:
      Type    Reason                   Age                From                                                        Message
      ----    ------                   ----               ----                                                        -------
      Normal  Scheduled                21s                default-scheduler                                           Successfully assigned default/ironman-jtntw to gke-my-first-cluster-1-default-pool-dddd2fae-j0k1
      Normal  LoadBalancerNegNotReady  21s (x2 over 21s)  neg-readiness-reflector                                     Waiting for pod to become healthy in at least one of the NEG(s): [k8s1-11092c60-default-ironman-80-859c8e3a]
      Normal  Pulling                  21s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Pulling image "ghjjhg567/ironman:latest"
      Normal  Pulled                   18s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Successfully pulled image "ghjjhg567/ironman:latest"
      Normal  Created                  18s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Created container ironman
      Normal  Started                  18s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Started container ironman
      Normal  Pulling                  18s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Pulling image "redis:4.0"
      Normal  Pulled                   14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Successfully pulled image "redis:4.0"
      Normal  Created                  14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Created container redis
      Normal  Started                  14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Started container redis
      Normal  Pulling                  14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Pulling image "nginx"
      Normal  Pulled                   14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Successfully pulled image "nginx"
      Normal  Created                  14s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Created container nginx
      Normal  Started                  13s                kubelet, gke-my-first-cluster-1-default-pool-dddd2fae-j0k1  Started container nginx
      Normal  LoadBalancerNegReady     2s                 neg-readiness-reflector                                     Pod has become Healthy in NEG "Key{\"k8s1-11092c60-default-ironman-80-859c8e3a\", zone: \"asia-east1-a\"}" attached to BackendService "Key{\"k8s1-11092c60-default-ironman-80-859c8e3a\"}". Marking condition "cloud.google.com/load-balancer-neg-ready" to True.

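In addition, the toleration `node.kubernetes.io/unschedulable:NoSchedule` is added automatically to DaemonSet Pods, so the default scheduler ignores a Node's `unschedulable` marking when scheduling DaemonSet Pods.

The DaemonSet controller automatically adds the following tolerations to DaemonSet Pods: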

| Toleration | Effect | Version | Description |
|---|---|---|---|
| node.kubernetes.io/not-ready | NoExecute | 1.13+ | DaemonSet Pods are not evicted when there are node problems such as a network partition. |
| node.kubernetes.io/unreachable | NoExecute | 1.13+ | DaemonSet Pods are not evicted when there are node problems such as a network partition. |
| node.kubernetes.io/disk-pressure | NoSchedule | 1.8+ | |
| node.kubernetes.io/memory-pressure | NoSchedule | 1.8+ | |
| node.kubernetes.io/unschedulable | NoSchedule | 1.12+ | DaemonSet Pods tolerate the unschedulable attribute set by the default scheduler. |
| node.kubernetes.io/network-unavailable | NoSchedule | 1.12+ | DaemonSet Pods that use the host network tolerate the network-unavailable attribute set by the default scheduler. |
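For reference, this is roughly how those automatically added tolerations appear in a DaemonSet pod's spec, for example when inspecting it with `kubectl get pod <pod-name> -o yaml`. Treat it as an approximate sketch: the exact set and order can vary with the Kubernetes version.

```yaml
# Approximate .spec.tolerations of a DaemonSet pod.
# These are injected automatically; you do not write them in your manifest.
tolerations:
  - key: node.kubernetes.io/not-ready
    operator: Exists
    effect: NoExecute
  - key: node.kubernetes.io/unreachable
    operator: Exists
    effect: NoExecute
  - key: node.kubernetes.io/disk-pressure
    operator: Exists
    effect: NoSchedule
  - key: node.kubernetes.io/memory-pressure
    operator: Exists
    effect: NoSchedule
  - key: node.kubernetes.io/unschedulable
    operator: Exists
    effect: NoSchedule
  # Added only for pods that set hostNetwork: true.
  - key: node.kubernetes.io/network-unavailable
    operator: Exists
    effect: NoSchedule
```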

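There are several possible patterns for communicating with Pods in a DaemonSet:

Pods can use a `hostPort`, which makes them reachable through the node IPs; clients obtain the list of node IPs by some means and know the corresponding port as well. Alternatively, clients can discover the DaemonSet through the `endpoints` resource or by retrieving multiple A records from DNS. The Headless Service deserves special mention here.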
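For the `hostPort` pattern mentioned above, a minimal sketch might look like the following. It reuses the nginx container from this article's DaemonSet; the host port number 8080 is an assumption chosen for illustration and is not part of the original manifest.

```yaml
# Fragment of a DaemonSet's .spec.template.spec (not a complete manifest).
containers:
  - name: nginx
    image: nginx
    ports:
      - containerPort: 80
        # Assumption: port 8080 is free on every node that runs this pod.
        # The pod then becomes reachable at <node IP>:8080.
        hostPort: 8080
```

Because a DaemonSet runs at most one such pod per node, the host port does not collide between replicas the way it could with a Deployment.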

First, let's review how a regular Service works (see Day-21 Service Kube-dns and Kube-proxy).

So how is a Headless Service different?

headless_service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: ironman
      labels:
        app: ironman
    spec:
      clusterIP: None
      ports:
        - name: ironman
          protocol: TCP
          port: 80
          targetPort: 80
      selector:
        app: ironman

The main difference is that clusterIP is set to None.

    $ kubectl get svc
    NAME      TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    ironman   ClusterIP   None         <none>        80/TCP    2s

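So what difference does having no clusterIP make?

In general, Headless Services are used most often with StatefulSets!

All the code for this chapter is in the day-25 branch of the GitHub project below.

That wraps up the DaemonSet chapter. I hope it sparks some ideas, such as using a DaemonSet to collect logs or to keep pods off nodes that are not running as workers. StatefulSet will be covered in a later chapter, so stay tuned!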

https://kubernetes.io/docs/concepts/workloads/controllers/daemonset/

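Sorry, a quick question:

So after v1.12, Pod scheduling goes back to being handled by the default scheduler by default? But then the article says "but there is still a way to have daemonSet pods managed by the Kubernetes scheduler...". So is the default for Pod scheduling the k8s scheduler (as I understand it, Kubernetes scheduler = default scheduler)? Or is it the DaemonSet controller, with the option of editing the YAML to use the k8s scheduler instead?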